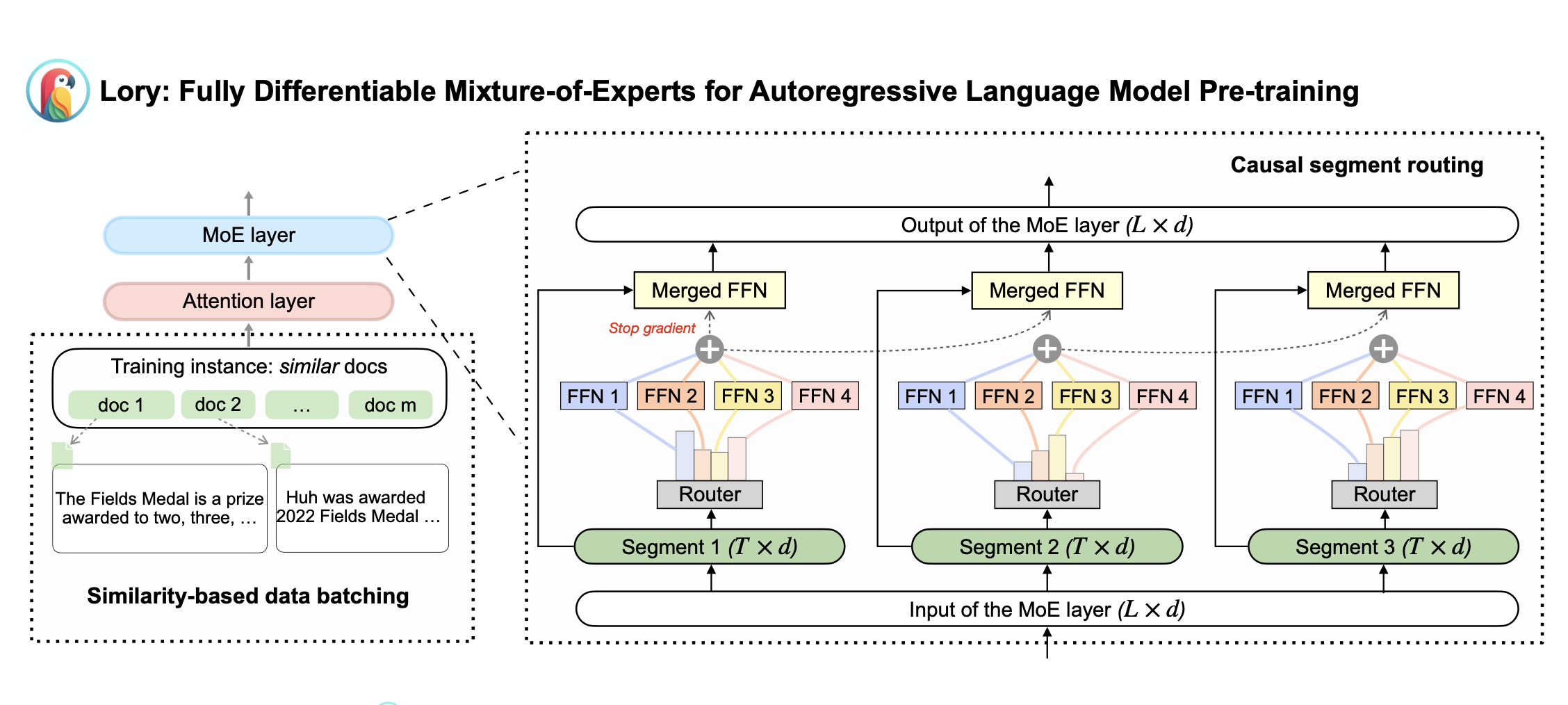

Lory: Fully Differentiable Mixture-of-Experts for Autoregressive Language Model Pre-training

COLM 2024

Zexuan Zhong

zzhong@princeton.edu

I am working at xAI. I am a core contributor to Grok-2, 3, and 4.

I built reward models for the RLHF of Grok-3 and co-led the RL post-training of Grok-4. I now focus on scaling up RL for Grok-next.

I completed my Ph.D. at Princeton University in 2024, advised by Prof. Danqi Chen. I received an M.S. from UIUC and a B.S. from Peking University.

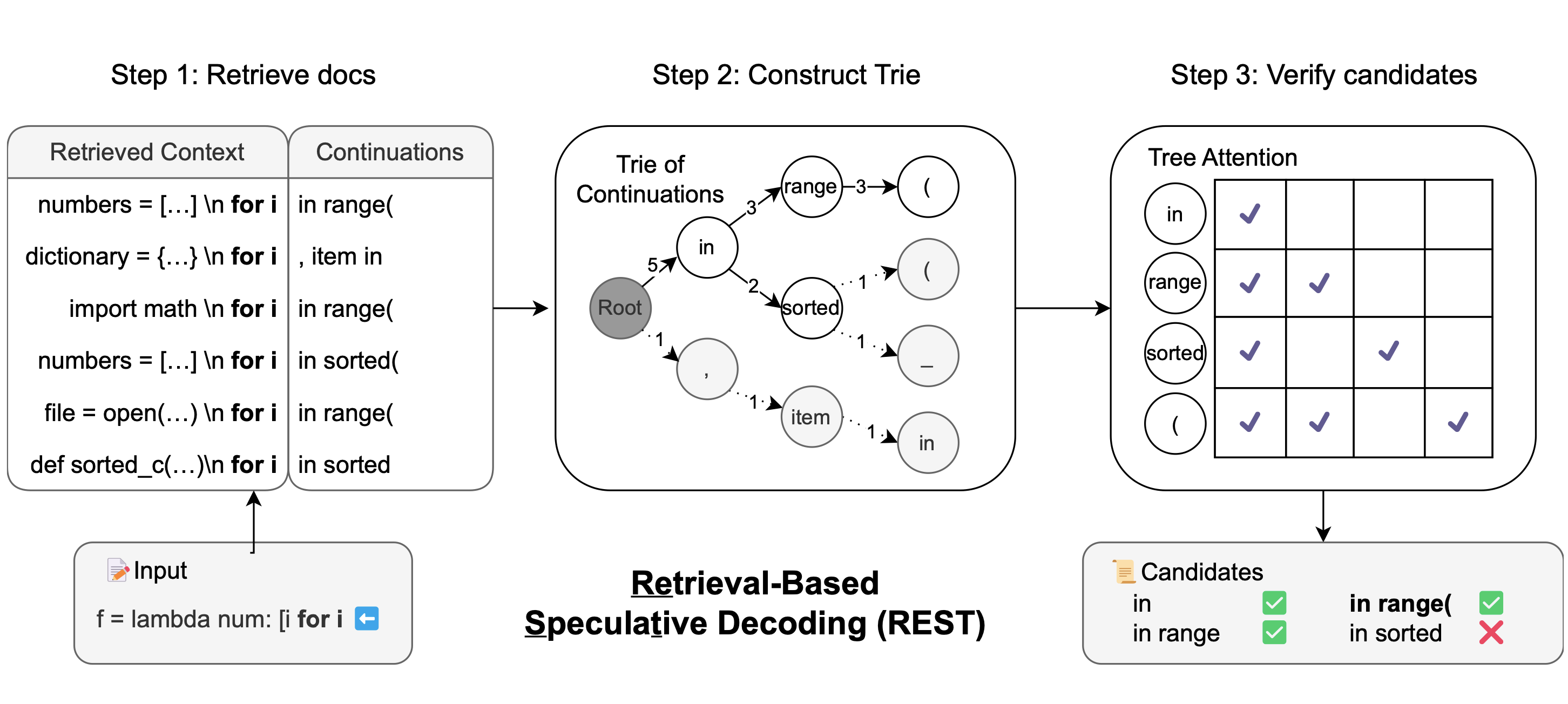

NAACL 2024

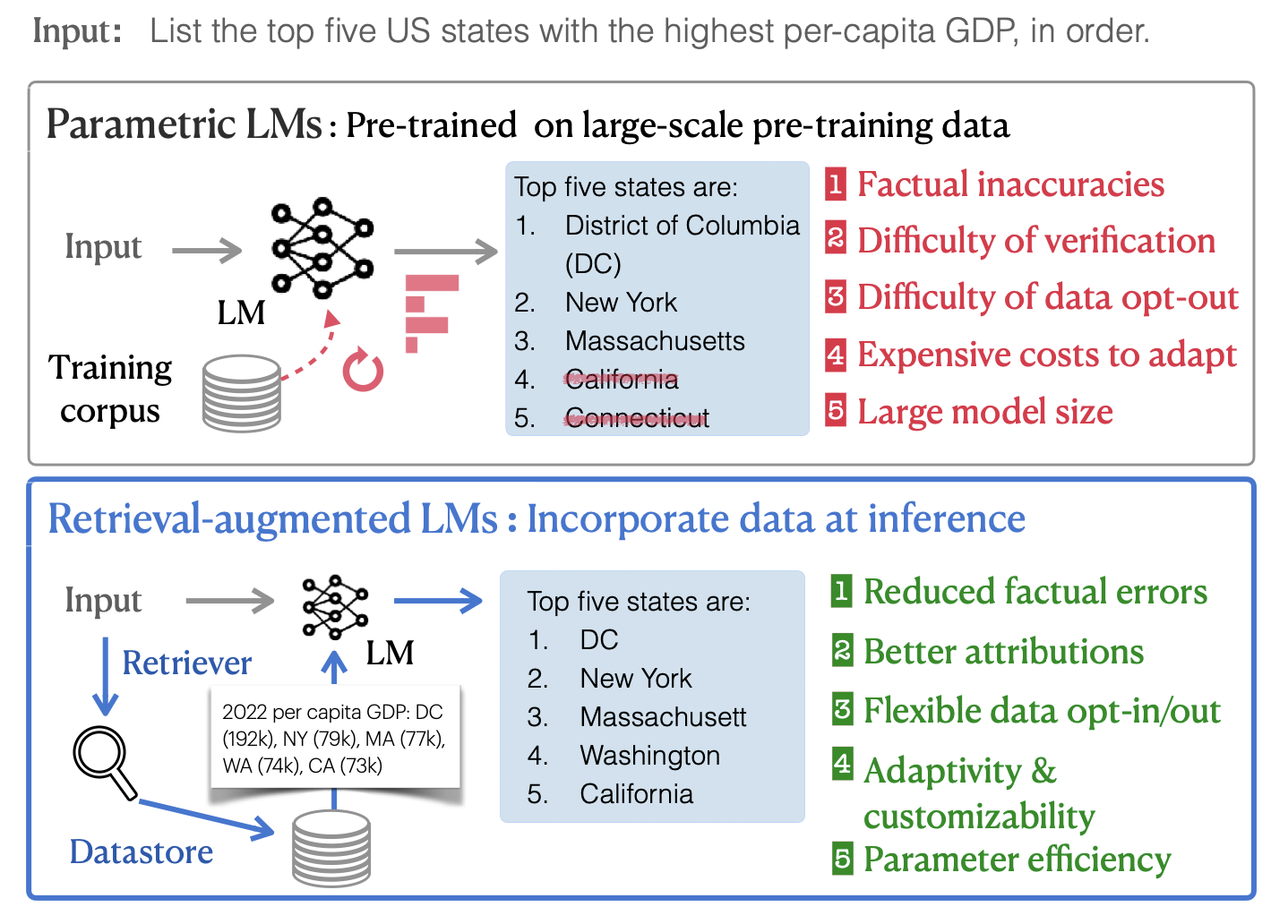

ACL 2023 (Tutorial)